Post-Doctoral Research Position (UCL or Bath)

PDRA Positions Available

We have a 12-month position available; please see below for the three different project suggestions!

Although I have moved to University College London, I retain one day a week at the University of Bath with a sole focus on the MyWorld project. There is an opportunity to be based at UCL if preferred, but some time will be required at the CAMERA Studio (depending on the needs of the project).

Please apply online via the University of Bath portal (the deadline for applications is 5th August 2025).

Background

As part of the £35m MyWorld programme, we are searching for a motivated individual to create the next generation of content capture and creation tools for the creative industries across film, TV, games and immersive Virtual and Augmented/Mixed Reality. This work will make use of the latest advances in Machine Learning, such as generative AI models trained on datasets of world-leading quality obtained via our unique CAMERA Studio facilities.

Work will be interdisciplinary and collaborative and will span research across computer vision, computer graphics, animation, machine learning and large language models. Candidates can come from a background in any of these areas but should be motivated to learn techniques and theory from across the disciplines; all areas will build on firm foundations in maths and programming.

CAMERA is an established research centre with a track record in motion capture, motion analysis and work with the creative industries. We have a strong record of supporting the career development of our researchers, including four former research associates who now hold faculty positions. Our intention is to help support and develop the future leaders of the field, and there will be many opportunities to showcase and champion the new research and technology you develop on a global stage. We also hope that you will help advise CAMERA projects and PhD students working on aligned topics.

Projects

We have one position available, targeted across a range of activities. There is great scope for candidates to shape the specific project, which is intended as a collaborative undertaking alongside our MyWorld team at the University of Bristol.

We have three project suggestions (please see the contact details at the end of each project and feel free to contact the specific collaborator):

1) Beyond SMPL: Building Anatomically Accurate Digital Human Models

While parametric body models like SMPL have revolutionised 3D shape and pose estimation, their low-resolution surface parametrisations and simplified representations of skeletal structure result in inaccurate predictions of human or animal motion, limiting their use in downstream tasks such as biomechanical analysis. This motivates the development of more expressive representations that capture the interplay between high-resolution surface geometry and anatomically accurate internal structures.

Links for background information: SMPL and SKEL

Technical Background

This role is ideal for someone passionate about advancing next-generation digital humans and animals, and excited to work with rich, real-world 3D data acquired using our cutting-edge motion and volumetric capture facility. You'll tackle challenging problems at the intersection of deep learning, 3D computer vision and mathematics to train and evaluate deep learning models. We are therefore looking for candidates with a proven track record in developing novel architectures, ideally using Transformer- and diffusion-based approaches. Knowledge of PyTorch and/or JAX is essential, while familiarity with C++ and CUDA for GPU-accelerated computing and knowledge of OpenGL/Metal are an advantage. A solid mathematical foundation is also highly desirable, with topics such as differential equations, differential geometry, continuum mechanics and geometric measure theory being particularly relevant to our work.

For further details, please contact Prof Neill Campbell.

2) Next-Generation Virtual Production with Light-Fields

Create the future of filmmaking! Help develop cutting-edge light-field technology for virtual production, as used in hits like The Mandalorian and House of the Dragon. Work with state-of-the-art hardware alongside industry giants like Foundry and DNEG to revolutionise on-set lighting and elevate realism for both the audience and performers.

Help Shape the Future of Film & TV Production

Virtual Production combines real-time game engines and giant LED screens to create immersive film sets, as used in shows like The Mandalorian and House of the Dragon. This project pushes the boundaries by developing light-field technology to improve on-set lighting realism for actors and audiences alike. You'll work hands-on with cutting-edge virtual production screens, with access to top-tier hardware and expert guidance from industry leaders like Foundry and DNEG. This is an exciting opportunity to drive innovation at the intersection of film, technology and visual effects.

Links for background information: Behind-the-Scenes Look into the Virtual Production of 1899, DNEG Introduction to Virtual Production and DNEG 360

Technical Background

This is an ideal role for anyone passionate about applying advanced computer science, AI, and machine learning to the world of film, TV, and games. You’ll work at the intersection of computer vision, graphics, and 3D rendering, developing next-gen virtual production tools.

We’re looking for someone with strong programming skills in Python, plus experience with C++ and CUDA for GPU-accelerated computing. Familiarity with game engines like Unreal or Unity is a big plus, as they power most Virtual Production stages.

You'll tackle exciting challenges in areas like light-fields, real-time rendering, volumetric lighting and 3D computer vision, contributing to the future of immersive media.

For further details, please contact Prof Neill Campbell.

3) Creating data-driven 3D human representations that combine the fidelity of implicit models with explicit controls preferred by animators

This project addresses the challenge of creating data-driven 3D human representations that combine the fidelity of implicit models with explicit controls preferred by animators. Current parametric models lack detail, while implicit methods lack direct manipulation. We aim to use a single “in-the-wild” video to learn a controllable 3D representation for an individual by understanding the latent space of implicit representations.

Problem Statement

Effectively modelling humans in 3D for animation and other real-world applications presents significant challenges. Current approaches often fall into two categories, each with its own limitations. Parametric models, such as SMPL-X, offer explicit controls that are highly valued by animators, allowing precise manipulation of human pose and shape. However, these models often lack the fidelity required for realistic representations, appearing somewhat generic or simplified. Conversely, recent advances in implicit human representations, such as those obtained with diffusion models and flows, have shown promise in generating highly detailed, realistic 3D human models. The primary drawback of these implicit representations is their inherent lack of explicit controls, which makes it difficult for animators to directly manipulate or animate the generated human models in a practical way.

The core problem this project addresses is the need for a 3D human representation that combines the high fidelity of diffusion models with the explicit controls crucial for real-world tasks and animation workflows. Furthermore, achieving this ambitious goal requires the ability to derive such a controllable and high-fidelity 3D representation from minimal input, ideally a single “in-the-wild” video of an individual. This necessitates a deep understanding of the latent space, enabling the extraction and manipulation of features that correspond to intuitive controls while maintaining realism.

For further details, please contact Dr Vinay Namboodiri.

The CAMERA Studio

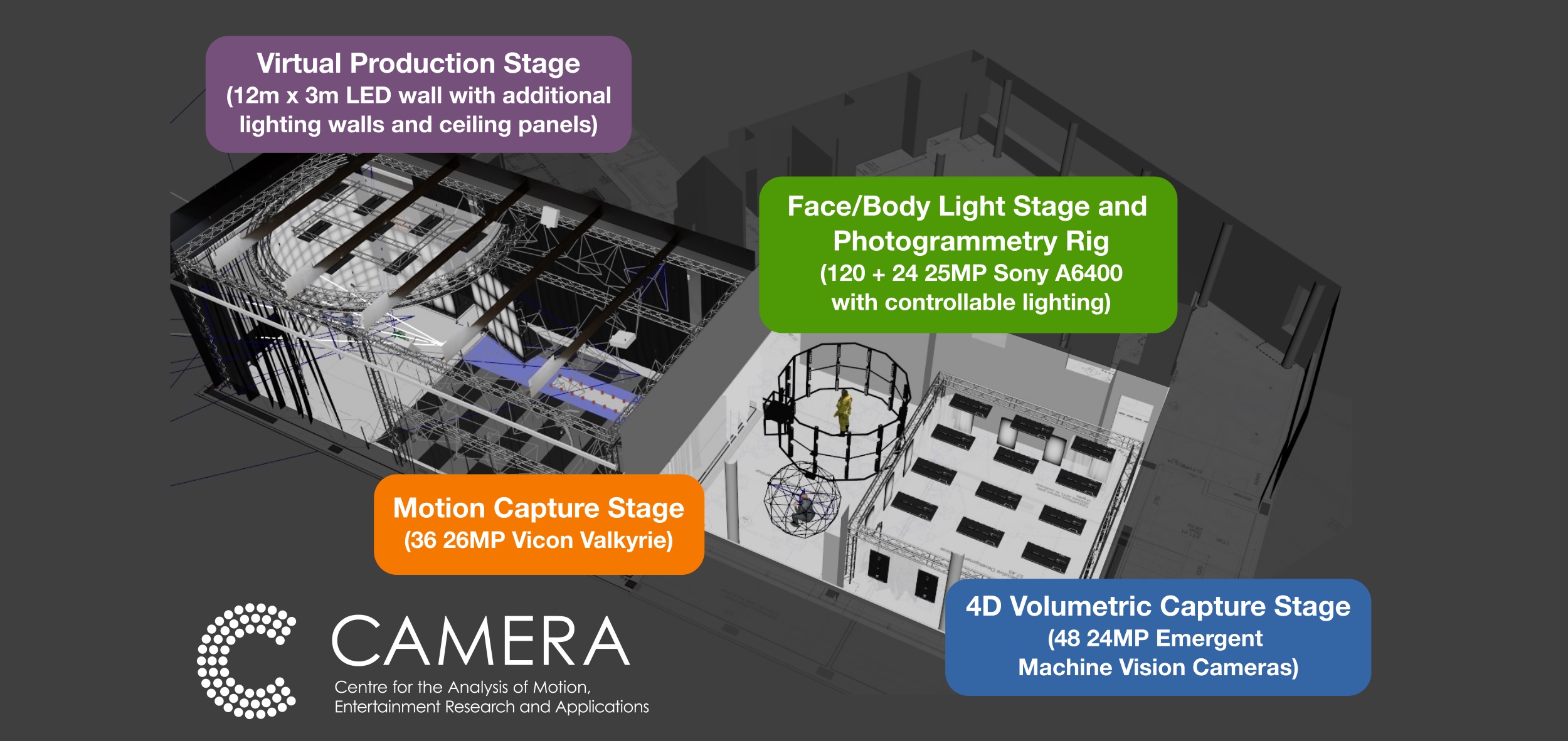

As part of our recent expansion, we are very excited about the opening of the new CAMERA Studio, which uniquely comprises four state-of-the-art capture stages, as illustrated above:

- Motion Capture Stage: Our marker-based motion capture facility is centred on 36 Vicon Valkyrie cameras (26MP each) that can capture highly detailed movement (e.g. individual fingers) as well as group interactions (many participants) in the large capture volume. We are interested in capturing truly realistic motion, whether for training or validating markerless capture systems or for biomechanical analysis. We have force plates to capture ground-reaction forces and suspension harnesses to simulate constrained motion (e.g. movement under Lunar or Martian gravity).

- Face/Body Light Stage and Photogrammetry Rig: The face capture system comprises a light stage from Esper with 25MP cameras and high-speed lighting control. The photogrammetry rig allows us to perform body reconstructions, e.g. for creating avatars. Combined, they allow us to capture cinematic-quality datasets, e.g. to investigate training data for automatic avatar creation and rig construction suitable for high-end visual effects.

- 4D Volumetric Capture Stage: This new room-scale stage allows free-form 4D capture or light-field capture using 48 Emergent machine vision cameras (24MP each). This will allow capture of high-quality, dynamic datasets, and we are interested in using novel view synthesis to provide virtual camera paths, as well as new methods for artistic control and editing of volumetric data (e.g. allowing NeRFs or similar to be used in immersive AR/VR productions or in visual effects).

- Virtual Production Stage: This brand-new 12m by 3m LED wall will allow us to develop the next generation of capture and editing tools for media production. After initial successes such as ILM's work on The Mandalorian, there is increasing demand for virtual production. Access to a state-of-the-art background wall plus movable front lighting walls and ceiling panels will let us work on the next generation of production and visual effects pipelines. We are particularly interested in achieving the highest-quality capture, e.g. recreating light-fields for lighting accuracy, and in providing the most intuitive creative control for the director, actors and crew.

You will work alongside the six members of our excellent studio team who have long-standing experience across both technical and creative/artistic aspects of the studio. Collaboratively, they will assist with capturing exciting and challenging new datasets or showcasing our research with internationally leading studios and award-winning creative teams (e.g. DNEG and Aardman Animations).

Join a World-Class Research Team Driving the Future of Creative Technology

You'll be based at the cutting-edge CAMERA Studio, collaborating with a dynamic and growing team of researchers. Supported by the expert CAMERA Research Engineering team, who bring deep hardware, software and creative know-how, you'll also join a vibrant research network through the MyWorld project. Work alongside fellow Postdoctoral Researchers, PhD students and leading academics from the University of Bath, the University of Bristol and UCL's renowned 3D Computer Vision Group. Be part of an inspiring community at the forefront of Visual Computing, AI and Machine Learning.

CAMERA partners with the world’s top external collaborators to bring new technology from the lab into real-world production studios. Our work consistently features at the most prestigious conferences in Computer Graphics (SIGGRAPH) and Computer Vision (CVPR, ICCV, ECCV), giving you a platform to shine at the forefront of your field.